FSlint

Duplicates

One of the most commonly used features of FSlint is finding duplicate files. Discarding duplicates is the easiest way to remove lint from a hard drive. Users often do not realize that they have four, five, or more copies of the exact same song in their music collection under different names or in different directories. Any type of file, whether music, photos, or work documents, can easily be copied and replicated on your computer. As duplicates accumulate, they eat away at the available hard drive space. The first menu option offered by FSlint allows you to find and remove these duplicate files.

Graphical Interface


The 'Duplicates' tab on the left-hand side of the screen is the default tab selected when FSlint starts. The algorithm used to determine whether one file is a duplicate of another is deliberately thorough, to minimize false positives that could lead to data loss. FSlint first scans the files and filters out any file whose size matches no other file. Files of exactly the same size are then checked to ensure they are not hard links to one another. Hard-linked files could have been created by a previous search if the user chose to 'Merge' the findings. Once FSlint is sure the files are not hard linked, it compares various signatures of the files using md5sum. To guard against md5sum collisions, FSlint then re-checks the signatures of any remaining files using sha1sum.
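The filtering stages described above can be sketched roughly as follows. This is an illustrative Python approximation, not FSlint's own code: the function names (`file_digest`, `split_groups`, `drop_hard_links`, `find_duplicates`) are hypothetical, it hashes whole files with Python's `hashlib` rather than calling md5sum and sha1sum, and it assumes a filesystem that reports meaningful device and inode numbers.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, algorithm, chunk_size=65536):
    """Hash a file's full contents with the named hashlib algorithm."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def split_groups(groups, key):
    """Split each group by key(path); keep only subgroups of two or more."""
    result = []
    for group in groups:
        buckets = defaultdict(list)
        for path in group:
            buckets[key(path)].append(path)
        result.extend(b for b in buckets.values() if len(b) > 1)
    return result


def drop_hard_links(group):
    """Keep one path per (device, inode) pair, since hard links share data."""
    unique = {}
    for path in group:
        info = os.stat(path)
        unique.setdefault((info.st_dev, info.st_ino), path)
    return list(unique.values())


def find_duplicates(root):
    """Return groups of paths under root whose contents appear identical."""
    files = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Only regular files are compared; symbolic links are skipped.
            if os.path.isfile(path) and not os.path.islink(path):
                files.append(path)

    # Stage 1: files with a size shared by no other file cannot be duplicates.
    groups = split_groups([files], os.path.getsize)
    # Stage 2: names that are hard links to the same data waste no space.
    groups = [drop_hard_links(group) for group in groups]
    groups = [group for group in groups if len(group) > 1]
    # Stage 3: compare MD5 signatures, then confirm with SHA-1.
    groups = split_groups(groups, lambda p: file_digest(p, "md5"))
    groups = split_groups(groups, lambda p: file_digest(p, "sha1"))
    return [sorted(group) for group in groups]
```

Calling `find_duplicates` on a directory returns a list of groups, each a sorted list of paths with matching size, MD5, and SHA-1. Ordering the checks from cheapest to most expensive means that most files are ruled out by size alone before any file contents are read.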

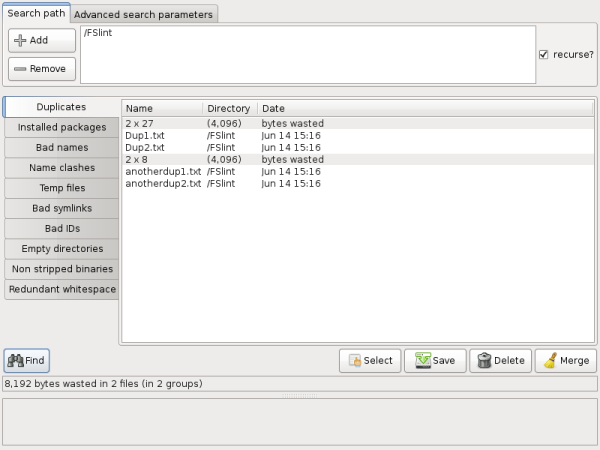

The 'Duplicates' interface is very simple. After verifying the 'Search path' location to be searched, the user can simply click the 'Find' button on the lower left of the screen. When the search finishes, the duplicate files found are displayed in the central portion of the screen. Each group of duplicates appears under a grey bar that shows how many files are in the group and the number of bytes wasted by the duplicates. The files themselves are listed below the grey divider by name, directory, and last modification date. Directly below the 'Find' button is the total number of bytes wasted, along with the total number of files and the total number of groups.
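As a rough illustration of how such totals could be derived, the sketch below assumes that "wasted" bytes means the size of every copy in a group beyond the first. That interpretation, and the `summarize` helper itself, are assumptions made for illustration; FSlint's own accounting may differ.

```python
def summarize(groups):
    """Summarize duplicate groups given as (file_size_bytes, copies) pairs.

    Returns (wasted_bytes, total_files, total_groups), assuming one copy
    in each group is kept and every other copy counts as waste.
    """
    groups = list(groups)
    wasted = sum(size * (copies - 1) for size, copies in groups)
    files = sum(copies for _size, copies in groups)
    return wasted, files, len(groups)
```

For example, under this assumption three copies of a 4,000,000-byte song waste 8,000,000 bytes, because only one copy is needed.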

Command Line Interface

The command line interface to this feature is 'findup', which can be found in the FSlint installation directory.

    $ /usr/share/fslint/fslint/findup --help
    find dUPlicate files.
    Usage: findup [[[-t [-m|-d]] | [--summary]] [-r] [-f] paths(s) ...]

    If no path(s) specified then the current directory is assumed.

    When -m is specified any found duplicates will be merged (using hardlinks).
    When -d is specified any found duplicates will be deleted (leaving just 1).
    When -t is specfied, only report what -m or -d would do.
    When --summary is specified change output format to include file sizes.
    You can also pipe this summary format to /usr/share/fslint/fslint/fstool/dupwaste
    to get a total of the wastage due to duplicates.

    Examples:

    search for duplicates in current directory and below
        findup or findup .
    search for duplicates in current directory and below listing the files full path
        findup -f
    search for duplicates in all linux source directories and merge using hardlinks
        findup -m /usr/src/linux*
    same as above but don't look in subdirectories
        findup -r .
    search for duplicates in /usr/bin
        findup /usr/bin
    search in multiple directories but not their subdirectories
        findup -r /usr/bin /bin /usr/sbin /sbin
    search for duplicates in $PATH
        findup `/usr/share/fslint/fslint/supprt/getffp`
    search system for duplicate files over 100K in size
        findup / -size +100k
    search only my files (that I own and are in my home dir)
        findup ~ -user `id -u`
    search system for duplicate files belonging to roger
        findup / -user `id -u roger`